June 2024 - Papers on Agents, Fine-tuning and reasoning

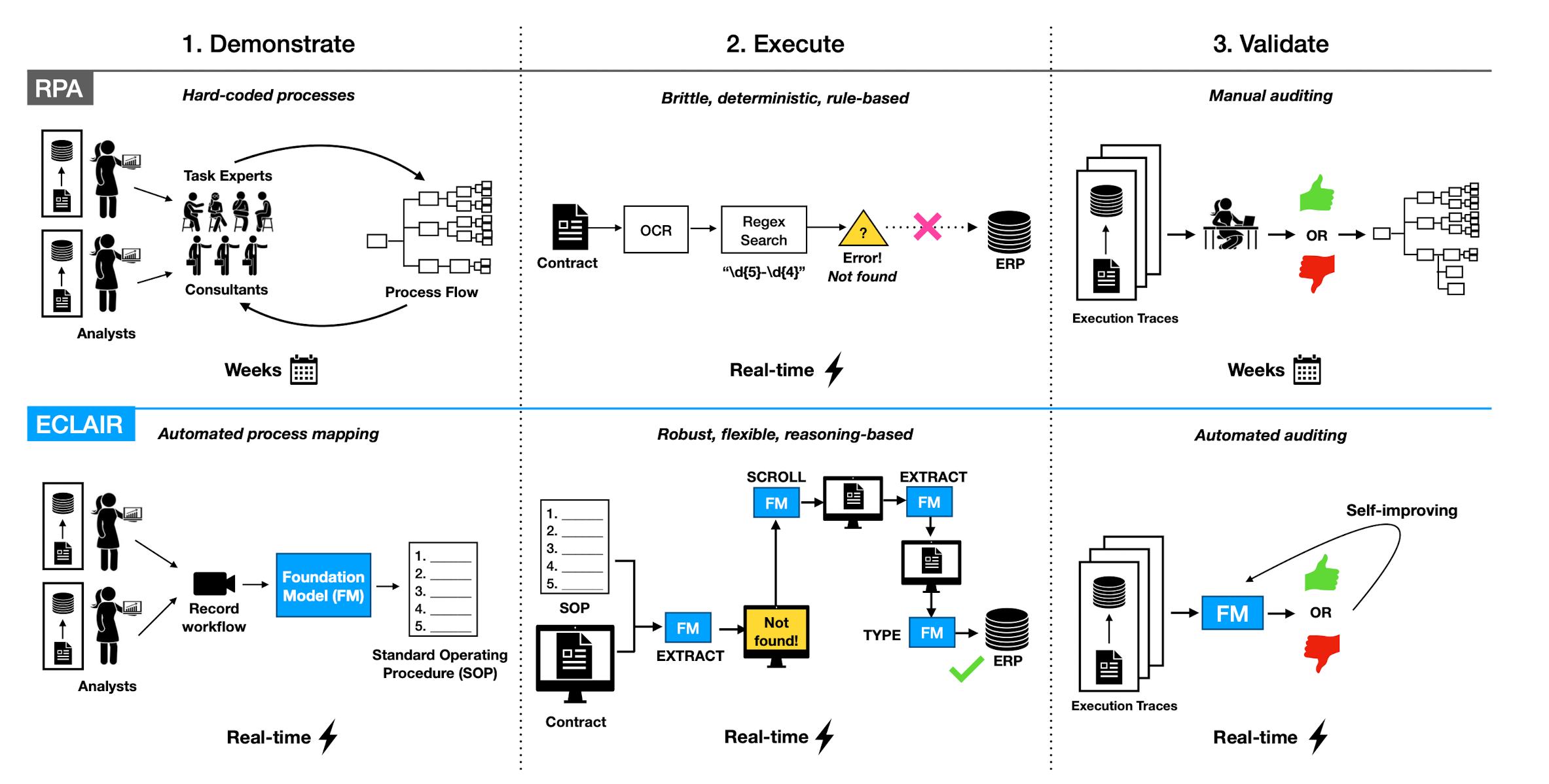

What’s included Multi-Agent RL for Adaptive UIs Is ‘Programming by Example’ (PBE) solved by LLM’s Learning Iterative Reasoning through Energy Diffusion LORA: Low-Rank Adaptation of LLMs Automating the Enterprise with Foundational Models MARLUI - Multi-Agent RL for Adaptive UI ACM Link: https://dl.acm.org/doi/10.1145/3661147 Paper: MARLUI Adaptive UIs Adaptive UIs - as opposed to regular UI’s, are UI’s that can actually adapt to the users needs. In this paper, the UI is adapting to optimize the number of clicks needed to reach the outcome....